Face recognition for superimposed facemasks using VGGFace2 in Keras

Perform face recognition on faces with superimposed facemasks using VGGFace2 in Keras. This post continues the work from the previous article, Facial mask overlay with OpenCV-dlib.

In this article, we will use MTCNN (Multi-task Cascaded Convolutional Networks) to detect the “masked” faces that were generated with OpenCV and the dlib library. Thereafter, we will run face recognition tests on the “masked” faces using VGGFace2 in Keras.

We will also test our “masked” faces with a deep learning model to determine whether our “masking” of faces is successful. However, that will be covered in another post.

Face Recognition Definition

Face recognition is the process of identifying and verifying people from images of their faces. According to the “Handbook of Face Recognition”, there are two main modes of face recognition: face verification and face identification.

- Face Verification. A one-to-one mapping of a given face against a known identity

- Face Identification. A one-to-many mapping for a given face against a database of known faces

Face Detection using MTCNN

Before we can recognize faces, we need to detect them. Face detection is an essential stage of a face recognition pipeline. As mentioned in the previous article, face detection can be performed in a variety of ways. In this post, we will use MTCNN (Multi-task Cascaded Convolutional Networks) to perform face detection on our “masked” faces. It is a deep learning model for face detection, described in the 2016 paper titled “Joint Face Detection and Alignment Using Multitask Cascaded Convolutional Networks”. It is a strong face detector that reports high detection accuracy on benchmark datasets.

Understanding Multi-task Cascaded Convolutional Networks

MTCNN (Multi-task Cascaded Convolutional Networks) is a deep learning method used to detect faces and facial landmarks in images and videos. It is a framework developed as a solution for both face detection and face alignment. MTCNN is a popular face detection technique because it not only achieved then state-of-the-art results on a range of benchmark datasets, but can also locate other key facial features such as the eyes and mouth, which is termed facial landmark detection. Refer to my previous post for a brief description of facial landmarks.

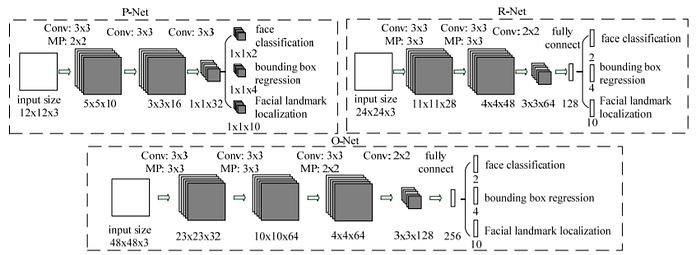

The network uses a cascade structure with three networks; first the image is rescaled to a range of different sizes (called an image pyramid), then the first model (Proposal Network or P-Net) proposes candidate facial regions, the second model (Refine Network or R-Net) filters the bounding boxes, and the third model (Output Network or O-Net) proposes facial landmarks. Figure 1 presents the architecture of MTCNN. Figure 2 shows the pipeline of the cascaded framework that includes three-stage multi-task deep convolutional networks.
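The image pyramid in the first step can be sketched as follows. This is a minimal illustration and not the ipazc/mtcnn source: the function name pyramid_scales() is hypothetical, and the defaults (a minimum face size of 20 pixels, a scale factor of 0.709 and a 12-pixel P-Net input window) are assumed values that are commonly used with MTCNN.

```python
def pyramid_scales(height, width, min_face_size=20, scale_factor=0.709, cell_size=12):
    """Return the list of scales at which the image would be resized.

    All default values are assumptions made for illustration.
    """
    scales = []
    # First scale maps a face of min_face_size pixels onto the 12-pixel window.
    scale = cell_size / min_face_size
    min_side = min(height, width) * scale
    # Keep shrinking until the shortest side no longer fits one window.
    while min_side >= cell_size:
        scales.append(scale)
        scale *= scale_factor
        min_side *= scale_factor
    return scales


# A 100x100 image yields 5 scales, starting at 0.6.
print(pyramid_scales(100, 100))
```

Each scale produces one resized copy of the image, so larger images or a smaller minimum face size mean more copies for P-Net to scan.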

In this post, we will be using the implementation provided by Iván de Paz Centeno in the ipazc/mtcnn project. This can be installed via pip. (Refer to installation section below)

Getting started

To start using the script, clone the repository with the link at the end of this post.

Install the required packages

It is advisable to make a new virtual environment with Python 3.7 and install the dependencies to perform facial detection using MTCNN. The other libraries for face recognition will be installed as necessary at a later stage.

# requirements.txt

numpy == 1.19.2

keras == 2.3.1

matplotlib >= 3.3.2

pillow >= 7.2.0

tensorflow == 2.0.0

mtcnn == 0.1.0

opencv-python == 4.4.0.44

pip >= 20.2.2

python >= 3.7.9

As MTCNN requires the usage of specific versions of Tensorflow and Keras and other peripheral libraries, it is critical to follow the specific steps in installing the relevant libraries as per this link.

Importing libraries

We will start by importing the necessary libraries required: numpy, os, matplotlib, pillow and mtcnn.

The next step is to set up the directory and path from which the images are to be imported. The known directory will contain all the known faces where they are labelled. This directory will be used in the subsequent section in face verification.

We will create an MTCNN face detector class. This detector will be used to detect all faces in the loaded images and extract the faces for subsequent use with the VGGFace face recognition models in later sections.

We will proceed to define a function extract_face_from_image() that loads an image from the given filename, detects the faces in it and returns them as a list of extracted faces. This function will be used at a later stage, where we need to compare each detected face with a known dataset.

The result from faces (line 10) is a list of bounding boxes, where each bounding box defines the top-left corner of the box (in image pixel coordinates), as well as its width and height. Lines 14–18 define the pixel coordinates of the bounding boxes, which are used to extract the detected faces. Lines 23 to 27 use the PIL library to resize the extracted face image to the required size; in our case, the model expects square input faces with the shape 224×224.

The next 3 functions that we define, highlight_faces(), draw_faces() and print_faces(), will be used in the face detection via MTCNN.
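A minimal sketch of such an extraction function is given here for orientation. It is not the script from the repository: it assumes that the mtcnn package's detect_faces() returns one dictionary per face with a 'box' entry in [x, y, width, height] form, the helper name box_to_corners() is hypothetical, and the clamping to the image borders is an added safeguard for boxes that extend past the edge.

```python
def box_to_corners(box, image_width, image_height):
    """Convert an [x, y, width, height] box to clamped (x1, y1, x2, y2) corners."""
    x, y, width, height = box
    x1, y1 = max(0, x), max(0, y)
    x2 = min(image_width, x + width)
    y2 = min(image_height, y + height)
    return x1, y1, x2, y2


def extract_face_from_image(image_path, required_size=(224, 224)):
    """Detect every face in an image and return each one resized to required_size."""
    # Third-party imports are local so box_to_corners() works without them.
    import numpy as np
    from mtcnn import MTCNN
    from PIL import Image

    pixels = np.asarray(Image.open(image_path).convert("RGB"))
    image_height, image_width = pixels.shape[:2]

    detector = MTCNN()
    faces = detector.detect_faces(pixels)

    face_images = []
    for face in faces:
        x1, y1, x2, y2 = box_to_corners(face["box"], image_width, image_height)
        if x2 <= x1 or y2 <= y1:
            continue  # skip degenerate boxes
        crop = Image.fromarray(pixels[y1:y2, x1:x2]).resize(required_size)
        face_images.append(np.asarray(crop))
    return face_images
```

Keeping the box arithmetic in its own helper makes it easy to check the cropping logic without loading the detector.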

highlight_faces() function draws red bounding boxes or rectangles for each of the faces detected in an image.

draw_faces() function will extract the detected faces and plot each of the faces separately.

The main intent of the print_faces() function is to print out the number of faces detected and then call the highlight_faces() function.
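The highlighting and printing steps could look roughly like the sketch below. The signatures are assumptions made for illustration (the functions here take the list of MTCNN detections as an argument), and face_rectangles() is a hypothetical helper.

```python
def face_rectangles(detections):
    """Return one (x, y, width, height) tuple per MTCNN detection."""
    return [tuple(detection["box"]) for detection in detections]


def highlight_faces(image_path, detections):
    """Show the image with a red rectangle around every detected face."""
    # matplotlib is imported here so face_rectangles() has no dependencies.
    from matplotlib import pyplot as plt
    from matplotlib.patches import Rectangle

    plt.imshow(plt.imread(image_path))
    axes = plt.gca()
    for x, y, width, height in face_rectangles(detections):
        axes.add_patch(Rectangle((x, y), width, height, fill=False, color="red"))
    plt.axis("off")
    plt.show()


def print_faces(image_path, detections):
    """Report how many faces were found, then highlight them."""
    print(f"{len(detections)} face(s) detected in {image_path}")
    highlight_faces(image_path, detections)
```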

Results

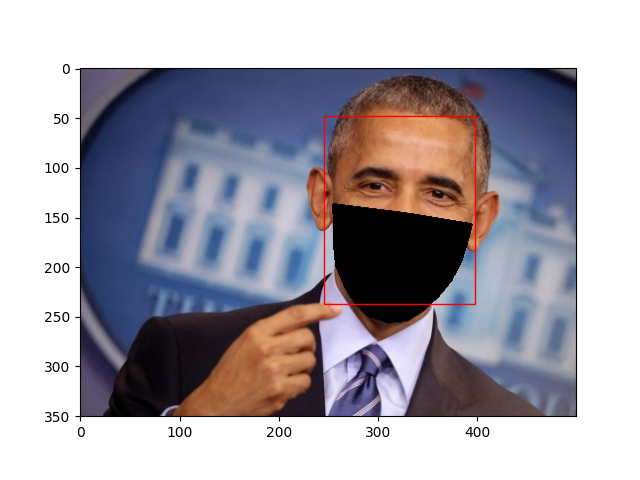

Running the print_faces() and draw_faces() functions on our test images, Obama and Ellen’s famous wefie shot, yields the results shown in Figures 3 and 4. Both test images have superimposed facemasks drawn using the script from my previous post, Facial mask overlay with OpenCV-dlib.

From the results obtained, it is evident that the MTCNN detector is successful in detecting and extracting both masked faces and unmasked faces that are occluded. MTCNN fails to detect only one “masked” face, and one of its detections for the “masked” faces contains two faces, as shown in Figure 4. The results suggest that we can use these functions to detect and extract the “masked” faces as inputs into the VGGFace face recognition model in subsequent sections.

Figures 5 to 7 provide further examples of running this script on the 3 celebrity images [source] with the synthetic face masks generated using OpenCV-dlib.

With the successful detection and extraction of “masked” faces using MTCNN, we can proceed to perform face recognition using VGGFace2 in Keras. As mentioned in the earlier section, there are 2 modes of recognition — face identification and face verification.

Perform Face Identification With VGGFace2

In this section, we will use the VGGFace2 model to perform face recognition with images of “masked” celebrities. Before we dive into the code, we need to understand what VGGFace2 is.

Understanding VGGFace2

VGGFace2 is a large-scale face dataset. It contains 3.31 million images of 9,131 subjects (identities), with an average of 362.6 images for each subject. The whole dataset is split into a training set (including 8,631 identities) and a test set (including 500 identities).

However, VGGFace2 has become synonymous with the pre-trained face recognition models that are trained on this dataset.

Several models were trained on the dataset, specifically a ResNet-50 and a Squeeze-and-Excitation ResNet-50 model (called SE-ResNet-50 or SENet). These models can be downloaded along with the associated code via this link. These models are evaluated on standard face recognition datasets, demonstrating then state-of-the-art performance. It is also mentioned that the SE-based model offers better performance in general.

It must be noted that both the model definitions and the pre-trained models for VGGFace2 are not directly usable with the TensorFlow or Keras libraries; a conversion is required first. This conversion work has already been completed by third-party projects and libraries and can be used directly. The VGGFace2 (and VGGFace) models that we use in Keras come from the keras-vggface project and library by Refik Can Malli.

Installing keras-vggface library for face recognition

This library can be installed via pip:

# Most Recent One (Suggested)

pip install git+https://github.com/rcmalli/keras-vggface.git

# Release Version

pip install keras_vggface

Other dependencies can also be installed via pip:

keras-applications == 1.0.8

keras-preprocessing == 1.1.0

Importing libraries and defining functions

We will proceed to import the necessary libraries and define new functions for face identification using VGGFace2.

from keras_vggface.utils import preprocess_input

from keras_vggface.utils import decode_predictions

from keras_vggface.vggface import VGGFace

As mentioned earlier, face identification is a one-to-many mapping for a given face against a database of known faces. We will be required to extract only a single face from an image containing one person.

Whereas extract_face_from_image() loads an image from the given filename and returns a list of extracted faces, the extract_face() function detects and returns a single face. The extracted face is resized to the input size required by the VGGFace2 model using the PIL library. This extracted face is then passed as input to the model_pred() function, where it is compared against the 8,631 identities in the VGGFace2 training set.

The model_pred() function prepares the extracted face and passes it to the pre-trained model, which predicts the probability of the face belonging to each of more than eight thousand known celebrities. However, before we can make a prediction, the pixel values must be scaled in the same way that the data was prepared when the VGGFace model was fit. Specifically, the pixel values must be centered on each channel using the mean from the training dataset. This is achieved using the preprocess_input() function provided in the keras-vggface library and specifying ‘version=2’ so that the images are scaled using the mean values used to train the VGGFace2 models instead of the VGGFace1 models (the default).

The pre-trained model is created using the VGGFace() constructor, specifying the type of model to create via the ‘model’ argument. See Line 13. The keras-vggface library provides three pre-trained VGGFace models: a VGGFace1 model via model=‘vgg16’ (the default), and two VGGFace2 models, ‘resnet50’ and ‘senet50’. In our case, we use VGGFace2, so we can select either ‘resnet50’ or ‘senet50’. In this post, we will use ‘senet50’. This Keras model will be used directly to predict the probability of a given face belonging to one or more of more than eight thousand known celebrities.

The model predicts a face embedding as a vector of length 2,048. The vector is then normalized, e.g. to a length of 1 (unit norm) using the L2 vector norm (Euclidean distance from the origin). This is referred to as the ‘face descriptor’. The similarity between face descriptors (or groups of face descriptors called a ‘subject template’) is calculated using the cosine similarity. The higher the cosine similarity (that is, the smaller the cosine distance), the higher the probability of a match.
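The normalization and similarity steps can be illustrated with plain Python. This is a toy sketch: the function names are hypothetical and the short vectors stand in for real 2,048-value embeddings.

```python
import math


def l2_normalize(vector):
    """Scale a vector so that its Euclidean (L2) length is 1."""
    norm = math.sqrt(sum(value * value for value in vector))
    if norm == 0:
        raise ValueError("cannot normalize a zero vector")
    return [value / norm for value in vector]


def cosine_similarity(a, b):
    """Cosine similarity is the dot product of the two unit-length vectors."""
    unit_a, unit_b = l2_normalize(a), l2_normalize(b)
    return sum(x * y for x, y in zip(unit_a, unit_b))


# Toy stand-in for a face descriptor.
descriptor = l2_normalize([3.0, 4.0])
print(descriptor)

# Vectors pointing in the same direction have similarity close to 1,
# orthogonal vectors have similarity 0.
print(cosine_similarity([1.0, 2.0, 3.0], [2.0, 4.0, 6.0]))
print(cosine_similarity([1.0, 0.0], [0.0, 1.0]))
```

Because the descriptors have unit length, their magnitudes no longer matter and only the direction of each vector contributes to the comparison.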

Once a prediction is made, the class integers are mapped to the names of the celebrities, and the top five names with the highest probability can be retrieved via the decode_predictions() function in the keras-vggface library.

We define a decoder() function that calls decode_predictions() and prints out the top five probabilities.

We will test the model with 3 celebrities: Matt Damon, Michael B. Jordan and Oprah Winfrey. These celebrities are among the 8,631 identities in the VGGFace2 training set.
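For orientation, the prediction and decoding steps might be sketched as follows. This is an approximation rather than the code in face_identity.py: format_top_predictions() is a hypothetical helper, and the sketch assumes that decode_predictions() returns, for each sample, a list of (name, probability) pairs.

```python
def format_top_predictions(decoded, top=5):
    """Turn one sample's decoded (name, probability) pairs into printable lines."""
    return [f"{name}: {probability * 100:.3f}%" for name, probability in decoded[:top]]


def model_pred(face_pixels, model_name="senet50"):
    """Return class probabilities for one 224x224 RGB face array."""
    # Heavy imports are local so the formatting helper works without them.
    import numpy as np
    from keras_vggface.utils import preprocess_input
    from keras_vggface.vggface import VGGFace

    samples = np.expand_dims(face_pixels.astype("float32"), axis=0)
    samples = preprocess_input(samples, version=2)  # VGGFace2 channel means
    model = VGGFace(model=model_name)
    return model.predict(samples)


def decoder(predictions):
    """Print the top five identities for the first (only) sample."""
    from keras_vggface.utils import decode_predictions

    results = decode_predictions(predictions)
    for line in format_top_predictions(results[0]):
        print(line)
```

A typical call would be decoder(model_pred(face)), where face is a single extracted face of shape 224×224×3.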

Sources of our images: Matt Damon (Wikipedia), Michael B. Jordan (Wikipedia) and Oprah Winfrey (The Guardian)

We will need to superimpose synthetic facemasks using our script before running the face_identity.py on both the original and “masked” faces.

Results of face recognition

After running the face_identity.py script on each of the test images of the original and “masked” faces individually, the results are as shown below:

From the results, we can observe that the addition of the synthetic face mask decreases the confidence level of face recognition to various degrees. The pre-trained model (senet50) is able to identify the masked faces for all 3 celebrities but with various degrees of confidence level. This is especially evident in the case of Oprah Winfrey where the confidence level decreases dramatically with “mask on” from 98.8% to a low percentage of 6.8%. Compared to “masked” Matt Damon and “masked” Michael B. Jordan, where the confidence level decreases by 10% and 40% respectively, the model is least confident in predicting the identity of Oprah Winfrey when she has a “face mask” on.

This also shows that the script in my article — Facial mask overlay with OpenCV-dlib can be another alternative in creating image datasets of people with face masks that can be used to train and evaluate facial recognition systems.

Perform Face Verification With VGGFace2

In this section, we will use a VGGFace2 model for face verification. As mentioned earlier, face verification is a one-to-one mapping of a given face against a known identity. We will be required to extract all the faces from an image and compare with another image with known identity.

This involves calculating a face embedding for a test unknown face and comparing the embedding to the embedding for the face known to the system.

A face embedding is a vector that represents the features extracted from the face. This will be used to compare with the vectors generated for other faces. Similar to the face embedding predicted by the given model, when two vectors are close (by some measure), this shows that the two faces may be the same person; on the other hand, when two vectors are far apart (by some measure), it will show that the two faces may be different (in terms of identity).

Typical measures such as Euclidean distance and Cosine distance are calculated between two embeddings and faces are said to match or verify if the distance is below a predefined threshold, often tuned for a specific dataset or application.

Importing libraries and defining functions

The libraries necessary are the same as face identification. The additional libraries that will be necessary are: glob and the cosine function from SciPy to compute the distance between two faces.

#Additional libraries necessary in face verification

import glob

from scipy.spatial.distance import cosine

Next, we will define additional functions for face verification: get_model_scores() and compare_face().

get_model_scores() function takes the extracted faces as inputs and returns the computed model scores. The model returns a vector, which represents the features of one or more faces. Similar to model_pred() function, the inputs are prepared using the preprocess_input() function provided in the keras-vggface library and specifying the ‘version=2’ so that the images are scaled using the mean values used to train the VGGFace2 models instead of the VGGFace1 models (the default).

We load the VGGFace model without the classifier by setting the ‘include_top’ argument to ‘False’, specifying the shape of the input via ‘input_shape’ and setting ‘pooling’ to ‘avg’ so that the filter maps at the output end of the model are reduced to a vector using global average pooling. This model will then be used to make a prediction, which will return a face embedding or vector for one or more faces provided as input.
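A minimal sketch of such a function is shown here, assuming the keras-vggface constructor arguments described above. It is not the exact code from face_verification.py; in particular, a real script would probably build the model once and reuse it rather than on every call.

```python
def get_model_scores(faces, model_name="resnet50"):
    """Return one embedding per face; faces is a list of 224x224x3 arrays."""
    # Imports are local so this definition loads without the heavy packages.
    import numpy as np
    from keras_vggface.utils import preprocess_input
    from keras_vggface.vggface import VGGFace

    samples = np.asarray(faces, dtype="float32")
    samples = preprocess_input(samples, version=2)  # VGGFace2 channel means

    # No classifier head; global average pooling yields one vector per face.
    model = VGGFace(
        model=model_name,
        include_top=False,
        input_shape=(224, 224, 3),
        pooling="avg",
    )
    return model.predict(samples)
```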

Since the model scores for each face are vectors, we need to find the similarity between the scores of two faces. As mentioned earlier, we will use a cosine function since vector representations of faces are well suited to cosine similarity. The cosine function computes the cosine distance between two vectors, that is, one minus their cosine similarity. The lower this number, the better the match between the two faces. In our case, we set the threshold (thres_cosine) at a distance of 0.5. This threshold varies with the use case and can be fine-tuned for the dataset and application. In general, cosine distance ranges from 0.0 to 2.0; for embeddings whose components are all non-negative, which we assume holds for the pooled embeddings used here, it stays between 0.0 (the faces are treated as identical) and 1.0 (the faces are treated as totally different). A typical cut-off value used for face identity is between 0.4 and 0.6.

The compare_face() function takes the vectors of two images as inputs and computes the cosine distance between them. It returns the score and displays the two faces if they are found to be similar based on the cosine score.

The last section of the face_verification.py script is a loop that goes through all the known faces in a library (dataset) and compares the detected faces in a test image with these known faces.
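The comparison and the loop can be sketched in plain Python as below. The names and signatures are assumptions for illustration, the toy 3-value vectors stand in for real embeddings, and cosine_distance() computes the same quantity as the cosine function from SciPy (one minus the cosine similarity).

```python
import math


def cosine_distance(a, b):
    """Return 1 minus the cosine similarity of two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)


def compare_face(known_embedding, candidate_embedding, thres_cosine=0.5):
    """Return (is_match, score); a match means the distance is within the threshold."""
    score = cosine_distance(known_embedding, candidate_embedding)
    return score <= thres_cosine, score


def verify_against_known(known_faces, test_embeddings, thres_cosine=0.5):
    """Compare every detected face with every known face and collect the matches."""
    matches = []
    for name, known_embedding in known_faces.items():
        for index, test_embedding in enumerate(test_embeddings):
            is_match, score = compare_face(known_embedding, test_embedding, thres_cosine)
            if is_match:
                matches.append((name, index, score))
    return matches


# Toy data: two known identities and two faces detected in a test image.
known = {"person_a": [1.0, 0.0, 0.0], "person_b": [0.0, 1.0, 0.0]}
detected = [[0.9, 0.1, 0.0], [0.0, 0.2, 0.9]]
print(verify_against_known(known, detected))
```

With these toy vectors only the first detected face is matched, and only to person_a; the second detected face is too far from both known identities.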

We will run the script with a test image of Obama and Joe Biden with thres_cosine = 0.5. Similar to the previous section, we will need to superimpose synthetic facemasks using our script before running the face_verification.py on both the original and “masked” faces.

Figure 12 shows the original test image and image with superimposed facemasks on the detected faces of Obama and Joe Biden. A table showing the comparison of the results for the two test images is shown with thres_cosine value = 0.5

Results of face recognition

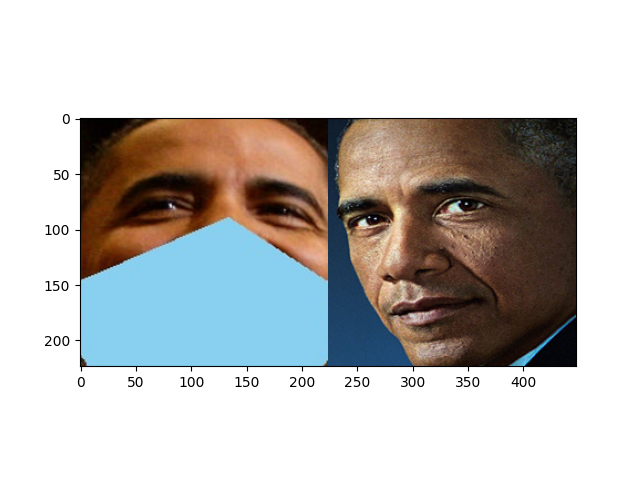

From our results above, the model (‘resnet50’) is able to compare and verify correctly, with high accuracy, both identities of Joe Biden and Obama in the original image (left image in Figure 12) against the images in the known database. We have 3 images of Joe Biden and 4 images of Obama in our known database. The accuracy is 67% for Joe Biden (two of three known images matched) and 100% for Obama.

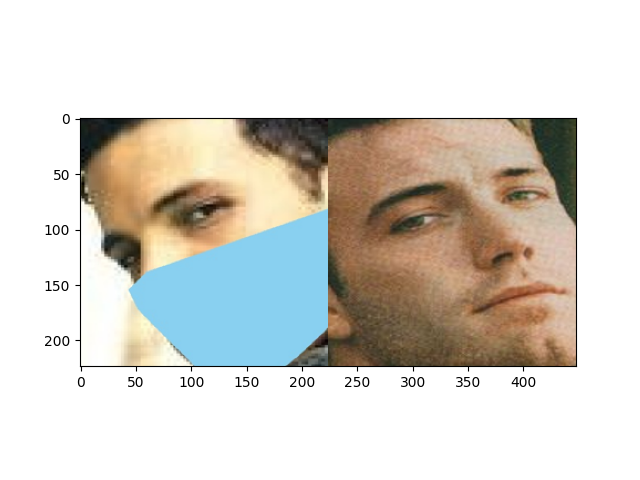

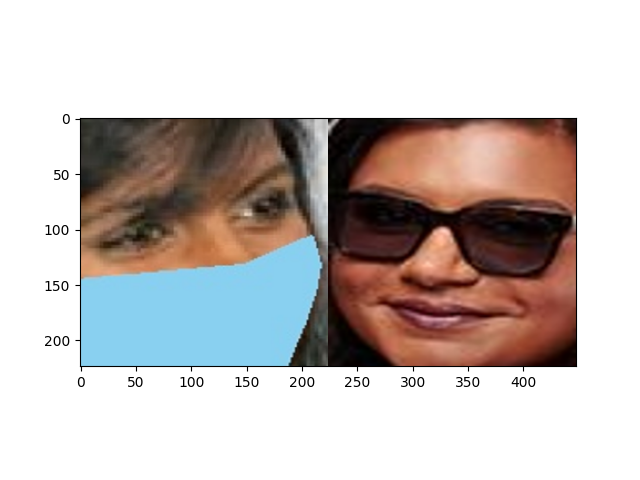

However, when we superimposed facemasks on the detected faces (right image in Figure 12), the accuracy of the model drops for both Joe Biden and Obama, by 33 and 25 percentage points respectively. The model is only able to compare and verify correctly one out of three images of Joe Biden in the known database, and three out of four images of Obama in the known database. Figures 13 and 14 show the subplots where the matched faces are displayed together as one subplot. The left image of each subplot is the extracted face from the test image (masked face), while the right image is the extracted face from the known database.
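The accuracy figures can be reproduced with a few lines of arithmetic. The counts come from the text above, except the unmasked count for Joe Biden, which is inferred from the reported 67% (two of three); match_rate() is a hypothetical helper.

```python
def match_rate(matched, total):
    """Percentage of known images that were matched."""
    return 100.0 * matched / total


# Counts per identity; "unmasked" for Joe Biden is inferred from the reported 67%.
results = {
    "Joe Biden": {"known": 3, "unmasked": 2, "masked": 1},
    "Obama": {"known": 4, "unmasked": 4, "masked": 3},
}

for name, counts in results.items():
    before = match_rate(counts["unmasked"], counts["known"])
    after = match_rate(counts["masked"], counts["known"])
    print(f"{name}: {before:.0f}% -> {after:.0f}% "
          f"(drop of {before - after:.0f} percentage points)")
```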

We can observe that similar to the ‘senet50’ model used in face identification, this model ‘resnet50’ is also able to detect and verify masked faces fairly accurately with a threshold value = 0.5.

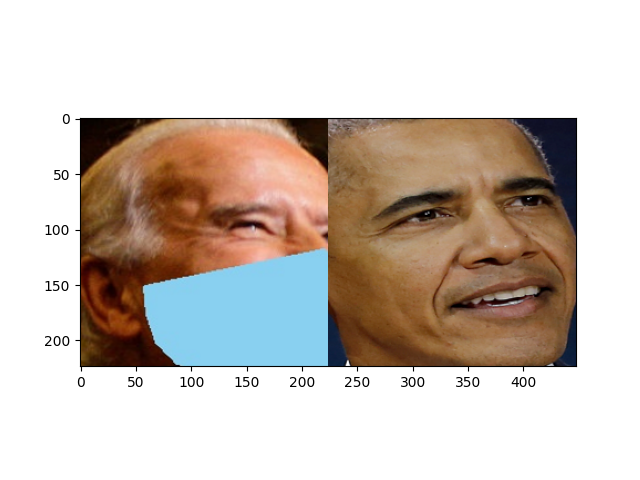

However, when a threshold value of 0.6 is used, we obtain a false positive on the match between ‘masked’ Joe Biden and Obama. Figure 15 shows the extracted matched faces of the test image of masked Joe Biden and masked Obama with a known face of Obama in the database directory. The results of the comparison scores are also shown.

Comparing image… /database\Obama (1).jpeg

there is a match! 0 0 0.48499590158462524

there is a match! 1 0 0.5521898567676544

From the results, the scores for both image matches are below the threshold value of 0.6, so both faces are reported as matches even though only one of them is Obama. It must be highlighted that additional factors, such as the emotions shown on the face and the face angles, also affect the accuracy of a face verification system. The threshold value should thus be fine-tuned to match specific applications.

Using the same model, we will perform face verification on the 3 celebrity images [source] with the synthetic face masks generated using OpenCV-dlib (refer to Figures 5 to 7). The threshold value used for this case is 0.6. We obtained this value after several runs of experimentation to avoid false positives in our results.
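The effect of the threshold on these two scores can be checked directly. The scores are the ones printed above; the labels ‘face 0’ and ‘face 1’ are an assumption based on the first index in each output line, and matches_at() is a hypothetical helper.

```python
# Cosine distances reported in the output above.
reported_scores = {
    "face 0": 0.48499590158462524,
    "face 1": 0.5521898567676544,
}


def matches_at(threshold, scores):
    """Return the labels whose distance is within the threshold."""
    return sorted(label for label, score in scores.items() if score <= threshold)


for threshold in (0.5, 0.6):
    print(threshold, matches_at(threshold, reported_scores))
# 0.5 ['face 0']
# 0.6 ['face 0', 'face 1']
```

Raising the threshold from 0.5 to 0.6 is enough to turn the second score into a match, which is how the false positive arises.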

Images in the known directory or database are selected from the same source as the test images of the celebrity images.

The results are shown in Figures 16 to 18. The tables that follow compare the results for the original unmasked face and the masked face.

It can be observed that the model accuracy in face verification drops by 50%, 25% and 33% for the masked faces of Ben Affleck, Elton John and Mindy Kaling respectively. As highlighted earlier and as the results above show, there is no universal threshold for matching two images. It is necessary to re-define or fine-tune the threshold value as new data comes into the analysis. It is also observed that, in addition to the synthetic face masks, the emotions of the faces and the angles of the faces are factors that determine the score and hence the accuracy of the model.

Conclusion

In this post, we have used MTCNN (Multi-task Cascaded Convolutional Networks) to successfully detect the “masked” faces that were generated using OpenCV and the dlib library, and highlighted them in the images to determine whether the model worked correctly. Thereafter, we performed face recognition tests, namely face identification and face verification, on the “masked” faces using VGGFace2 in Keras. The VGGFace2 models were used to extract features from faces in the form of a vector and to match different faces.

In face identification of masked face of celebrities, the pre-trained model (senet50) is able to identify the masked faces for 3 celebrities but with various degrees of confidence level.

Performing face verification to confirm the identity of a person given a photograph of their “masked” faces using VGGFace2 model is possible with the pre-trained model (‘resnet50’) with fine-tuning of threshold value. Other factors such as emotions of the face and face angles will also affect the accuracy in face verification system.

The results also show that the script in my article, Facial mask overlay with OpenCV-dlib, can provide an alternative way to create image datasets of people with face masks that can be used to train and evaluate facial recognition systems.

You can download my complete code here: https://github.com/xictus77/Maskedface_verify.git

References:

2. How to Perform Face Detection with Deep Learning

3. Face Detection Using MTCNN (Part 2)

4. Stan Z. Li and Anil K. Jain. 2011. Handbook of Face Recognition (2nd ed.). Springer Publishing Company, Incorporated.

5. Sources of images — open source and https://www.kaggle.com/dansbecker/5-celebrity-faces-dataset