Facial mask overlay with OpenCV-dlib

Superimpose facemasks using OpenCV-dlib library

Face masks have been shown to be one of the best defenses against the spread of COVID-19. However, they have also caused facial recognition algorithms to fail, because these algorithms are built around facial features including the nose, mouth and jawline. Before the global pandemic, facial recognition systems verified whether two images showed the same face by comparing measurements between the facial features detected in each. A mask worn over a person’s nose, mouth and cheeks greatly reduces the information normally used to establish their identity.

Recognition systems will need to be re-trained or re-designed to recognize masked faces in regulated areas. This requires a large dataset of masked faces for training deep learning models to distinguish people wearing masks from those who are not. Currently, few image datasets of people with face masks are usable for training and evaluating facial recognition systems. A study by the US National Institute of Standards and Technology (NIST) reportedly addressed this issue by superimposing masks (of various colours, sizes and positions) over images of unmasked faces. [source]

This post attempts to replicate this process using OpenCV and the dlib library: we synthetically generate 5 types of face masks and draw them on face images. Figure 1 shows the 5 types of face masks generated.

In another post, we will test and validate the “masked” faces using a deep learning method, MTCNN (Multi-task Cascaded Convolutional Networks).

Getting started

To start using the script, clone the repository from the link at the end of this post.

Install the required packages

It’s advisable to make a new virtual environment with Python 3.7 and install the dependencies. The libraries required are as stated below:

#requirements_facemask.txt

numpy == 1.18.5

pip == 20.2.2

imutils == 0.5.3

python >=3.7

dlib == 19.21.0

cmake == 3.18.0

opencv-python == 4.4.0

As this script requires the dlib library, it is critical to install dlib before running the script. You can learn how to install dlib with Python bindings from the following link:

Dlib is an advanced machine learning library that was created to solve complex real-world problems. It is written in C++ and also provides Python bindings.

Importing libraries

We will start by importing the libraries needed to digitally overlay face masks: OpenCV, dlib, numpy, os and imutils.

The next step is to define the colors of the face masks and to set up the directory and path from which the images are imported. Note that OpenCV stores colors in BGR order instead of RGB.

The following link lets you explore colors visually and convert them between hexadecimal and RGB: https://www.rgbtohex.net/rgb/
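As a small illustration of the BGR ordering, the sketch below converts a hexadecimal color string into the (B, G, R) tuple that OpenCV drawing functions expect. The helper name `hex_to_bgr` and the palette values are illustrative assumptions, not taken from the original script.

```python
def hex_to_bgr(hex_color):
    """Convert a color like '#1E90FF' to an OpenCV-style (B, G, R) tuple."""
    value = hex_color.lstrip("#")
    if len(value) != 6:
        raise ValueError("expected a 6-digit hex color, got %r" % hex_color)
    # Parse the red, green and blue channels from consecutive hex pairs.
    red, green, blue = (int(value[i:i + 2], 16) for i in (0, 2, 4))
    # OpenCV stores channels in blue, green, red order.
    return (blue, green, red)


# Hypothetical palette; the script's actual color values may differ.
MASK_COLORS = {
    "blue": hex_to_bgr("#0000FF"),
    "black": hex_to_bgr("#000000"),
}
```

Keeping the conversion in one helper avoids accidentally passing RGB tuples to OpenCV, which would swap the red and blue channels.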

Preprocess image

Next, we will load our input image via OpenCV, then pre-process it by resizing it to a width of 500 pixels and converting it to grayscale.
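In practice this step is usually a call to `imutils.resize(image, width=500)` followed by `cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)`. The sketch below only mirrors the arithmetic behind those two calls in plain Python, so the effect on image size and pixel values is easy to see. The helper names are hypothetical, and the weights are the standard luma weights for grayscale conversion.

```python
def resized_shape(height, width, target_width=500):
    """Return (new_height, new_width) for an aspect-preserving resize."""
    # Scale both sides by the same ratio so the face is not distorted.
    scale = target_width / float(width)
    return (int(height * scale), target_width)


def bgr_to_gray_value(blue, green, red):
    """Grayscale intensity of one BGR pixel using the standard luma weights."""
    return int(round(0.114 * blue + 0.587 * green + 0.299 * red))
```

For example, a 1000 x 600 (width x height) photo is halved on both sides, so the aspect ratio is unchanged.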

Detecting and extracting facial landmarks using dlib, OpenCV, and Python

To overlay face masks, we first need to perform face detection. Many methods are available for this task: we could use OpenCV’s built-in Haar Cascade XML files, or a model built with TensorFlow or Keras. In this post, we use dlib’s face detector.

Before we proceed further, it is important to understand how dlib’s face detector and facial landmark detection work. The frontal face detector in dlib is based on histogram of oriented gradients (HOG) features and a linear SVM.

We use dlib’s frontal face detector to first detect the face, and then detect facial landmarks with dlib’s facial landmark predictor, dlib.shape_predictor.

Facial landmark detection is defined as the task of detecting key landmarks on the face and tracking them (being robust to rigid and non-rigid facial deformations due to head movements and facial expressions). [source]

What are Facial landmarks?

Facial landmarks are used to localize and represent salient regions of the face, such as eyes, eyebrows, nose, jawline, mouth, etc.

It is a technique that has been applied to applications like face alignment, head pose estimation, face swapping, blink detection, drowsiness detection, etc.

In the context of facial landmarks, it is necessary to detect the important facial structures on the face using shape prediction methods. Facial landmarks detection involves two steps:

- Localizing the face in the image.

- Detecting the key facial structures on the face.

As mentioned earlier, face detection can be performed in a variety of ways, but facial landmark detectors essentially all try to localize and label the following facial regions:

- Nose

- Jaw

- Left and right eyes

- Left and right eyebrows

- Mouth

In this post, we use dlib’s HOG-based frontal face detector for face localization, that is, to detect the faces in the image. For each face, it returns a bounding box given by (x, y) coordinates in the image. Once the face region is detected and bounded, we proceed to the next step of detecting the key facial structures in the face region.

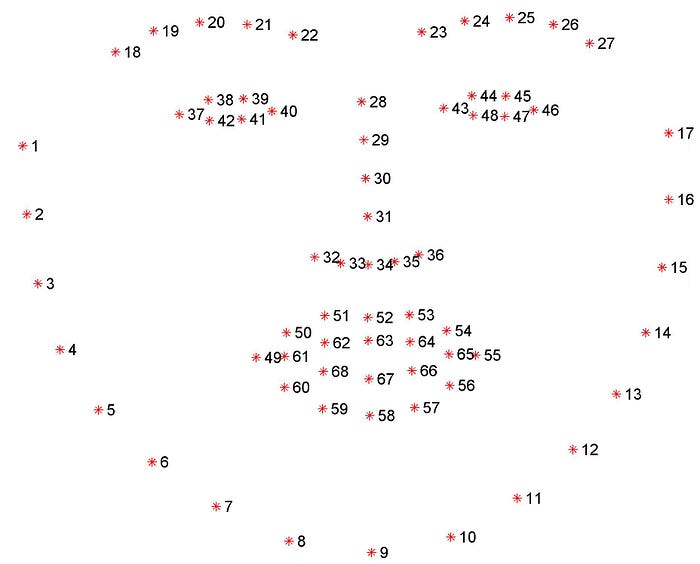

We are using the pre-trained facial landmark detector that is included in the dlib library. It is an implementation of the One Millisecond Face Alignment with an Ensemble of Regression Trees paper by Kazemi and Sullivan (2014) where it estimates the location of 68 (x, y)-coordinates that map to facial structures on the face. We can visualize these indexes of 68 coordinates or points using the image below:

From Figure 3, the locations of the facial features can be accessed via the different sets of points [start point, end point]:

- Left eye: points [42, 47]

- Mouth: points [48, 67]

- Left eyebrow: points [22, 26]

- Nose: points [27, 35]

- Right eyebrow: points [17, 21]

- Right eye: points [36, 41]

- Jawline: points [0, 16]

Note that the landmark points are numbered from 0, so the 68 points run from 0 to 67.

These annotations are part of the 68 point iBUG 300-W dataset which the dlib facial landmark predictor was trained on.
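The point ranges listed above can be captured in a small lookup table. The sketch below is a minimal illustration, assuming the landmarks are already available as a list of 68 (x, y) tuples. The names `LANDMARK_RANGES` and `region_points` are hypothetical, and the nose range is taken as 27 to 35 so that the seven regions together cover all 68 points of the standard layout.

```python
# Inclusive (start, end) indexes into the 68-point layout, counted from 0.
LANDMARK_RANGES = {
    "jawline": (0, 16),
    "right_eyebrow": (17, 21),
    "left_eyebrow": (22, 26),
    "nose": (27, 35),
    "right_eye": (36, 41),
    "left_eye": (42, 47),
    "mouth": (48, 67),
}


def region_points(points, region):
    """Slice the (x, y) points that belong to one facial region."""
    start, end = LANDMARK_RANGES[region]
    # The ranges are inclusive, so the slice must extend one past `end`.
    return points[start:end + 1]
```

With this table, `region_points(points, "jawline")` returns the 17 points that later form the lower edge of a mask.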

Face Detection and facial landmark detection

The next step involves initializing dlib’s pre-trained face detector based on a modification to the standard Histogram of Oriented Gradients + Linear SVM method for object detection. This detector will handle the detection of the bounding box of faces in our image.

The first parameter to the detector is our grayscale image (the detector works with color images as well).

The second parameter is the number of image pyramid layers to apply when upscaling the image prior to applying the detector. The advantage of increasing the resolution of the input image prior to face detection is that it may allow us to detect more faces in the image. However, the disadvantage is that the larger the input image, the more computationally expensive and slower the detection process becomes.
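To get a feel for this trade-off, the sketch below estimates how the image grows with the upsampling parameter. It assumes that each pyramid layer roughly doubles both the width and the height, so the figures are approximations rather than dlib's exact output sizes. Both helper names are hypothetical.

```python
def upsampled_shape(height, width, upsample_times):
    """Approximate (height, width) after upsampling, assuming 2x per layer."""
    factor = 2 ** upsample_times
    return (height * factor, width * factor)


def relative_pixel_count(upsample_times):
    """How many times more pixels the detector has to scan (assumed 4x per layer)."""
    return 4 ** upsample_times
```

Under this assumption, upsampling once means the detector scans about four times as many pixels, and upsampling twice about sixteen times as many.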

We will also print out the number of faces detected and the coordinates of their bounding boxes. We can then loop over the detected faces and draw their bounding boxes with cv2.
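dlib reports each detected face as a rectangle with `left()`, `top()`, `right()` and `bottom()` accessors, whereas OpenCV code often works with (x, y, width, height). The sketch below shows one way to convert between the two. `FakeRect` is a hypothetical stand-in so that the example runs without dlib installed, and the function name is illustrative.

```python
def rect_to_bounding_box(rect):
    """Convert a dlib-style rectangle to an (x, y, w, h) tuple."""
    x = rect.left()
    y = rect.top()
    w = rect.right() - x
    h = rect.bottom() - y
    return (x, y, w, h)


class FakeRect:
    """Stand-in exposing the same accessor methods as a dlib rectangle."""

    def __init__(self, left, top, right, bottom):
        self._edges = (left, top, right, bottom)

    def left(self):
        return self._edges[0]

    def top(self):
        return self._edges[1]

    def right(self):
        return self._edges[2]

    def bottom(self):
        return self._edges[3]


# Print the number of faces and each bounding box, as described above.
faces = [FakeRect(10, 20, 110, 170)]
print("Number of faces detected:", len(faces))
for face in faces:
    print("Bounding box (x, y, w, h):", rect_to_bounding_box(face))
```

With real detections, the resulting tuple can be used to draw a box with `cv2.rectangle(image, (x, y), (x + w, y + h), color, thickness)`.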

In order to detect facial landmarks, we use dlib’s facial landmark predictor, dlib.shape_predictor, which loads a pre-trained model file that must be downloaded separately.

The shape predictor requires the model file “shape_predictor_68_face_landmarks.dat”. It can be downloaded as a bz2-compressed archive, which must be decompressed before use, from: http://dlib.net/files/shape_predictor_68_face_landmarks.dat.bz2

It should be highlighted that this model file is designed for use with dlib’s HOG face detector only and should not be used with dlib’s CNN based face detector. The reason is that it expects the bounding boxes from the face detector to be aligned the way dlib’s HOG face detector does it.

The results will not turn out well when used with another face detector that produces differently aligned boxes, such as the CNN based mmod_human_face_detector.dat face detector.

Once the shape predictor is downloaded, we can initialize the predictor for subsequent use to detect facial landmarks on every face detected in the input image.
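The predictor returns a shape object whose points are read with `shape.part(i).x` and `shape.part(i).y`, with `shape.num_parts` giving the number of points. The sketch below converts such an object into a plain list of (x, y) tuples, which is easier to slice and draw with. `FakeShape` is a hypothetical stand-in so that the example runs without dlib or the model file, and the function name is illustrative.

```python
from collections import namedtuple

Point = namedtuple("Point", ["x", "y"])


def shape_to_points(shape):
    """Collect every landmark of a dlib-style shape as an (x, y) tuple."""
    return [(shape.part(i).x, shape.part(i).y) for i in range(shape.num_parts)]


class FakeShape:
    """Stand-in exposing `num_parts` and `part(i)` like a dlib shape object."""

    def __init__(self, coords):
        self._parts = [Point(x, y) for x, y in coords]
        self.num_parts = len(self._parts)

    def part(self, index):
        return self._parts[index]


# Synthetic landmarks; a real shape would come from the predictor.
landmarks = shape_to_points(FakeShape([(i, 2 * i) for i in range(68)]))
```

In the real pipeline, the shape would come from calling the initialized predictor on the grayscale image and one detected face rectangle.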

Once the facial landmarks are detected, we can start “drawing” (overlaying) the facemasks on the faces by joining the required points using the Drawing Functions in OpenCV.

Dlib Masking Methodology

The following step involves identifying the points required to draw the different types of facemasks. The types of facemasks that we are replicating are defined by different sets of points listed in Appendix A of the NIST study paper. See Figure 4 for the visuals.

We will define the shape of the facemasks by connecting the landmark points as defined in Appendix A of the paper. For example, to form a wide and medium coverage mask, we connect (draw) the jawline landmark points [0, 16] with the coordinates of landmark point 29.
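The example above can be sketched as a function that builds the mask outline from the landmark list. This is a minimal illustration, assuming the landmarks are a list of 68 (x, y) tuples. The function name and the `apex_index` parameter are hypothetical, and the other mask types in Appendix A use point sets that are not reproduced here.

```python
def jawline_mask_polygon(points, apex_index=29):
    """Outline of a mask: jawline points 0 to 16, closed through one nose point."""
    if len(points) != 68:
        raise ValueError("expected 68 landmark points, got %d" % len(points))
    # Jawline runs ear to ear along the chin; the apex point closes the shape
    # across the nose bridge.
    return list(points[0:17]) + [points[apex_index]]


# Synthetic landmarks, only to show the shape of the result.
demo_points = [(i, 100 - i) for i in range(68)]
polygon = jawline_mask_polygon(demo_points)
```

To draw it, the polygon can be converted with `numpy.array(polygon, dtype=numpy.int32)` and passed to `cv2.fillPoly(image, [array], color)`.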

The facemask outlines can be drawn using the Drawing Functions in OpenCV for the ellipse and the three other types of regular shaped masks. We can then use the cv2.fillPoly function to fill the drawn facemasks with color.

The user selects the color and type of facemask before image detection starts. We have pre-selected two colors for facemasks, blue and black, in the user input function.

Results

Figure 5 compares the original input image, an image of Barack Obama, with the output images with facemasks superimposed by the script. The script also works on group shots: see Figure 6 for the results of superimposing facemasks on the faces detected in Ellen’s famous wefie shot.

We are able to successfully replicate the process of generating the 5 different types of facemasks (detailed in Appendix A) that can be superimposed over images of unmasked faces using dlib and OpenCV.

Figures 7 to 9 show more examples of running this script on faces not looking directly into the camera.

Conclusion

This script generates a synthetic mask on each detected face. The output image can then be used to test or validate other application-oriented ML networks, such as face recognition for indoor attendance systems, mask detection, etc.

You can download the complete code here:

https://github.com/xictus77/Facial-mask-overlay-with-OpenCV-Dlib.git

References:

1. Facial landmarks with dlib, OpenCV, and Python

3. Real-Time Face Pose Estimation

4. Drawing Functions in OpenCV

5. Sources of images — open source and https://www.kaggle.com/dansbecker/5-celebrity-faces-dataset